In this article, we test the currently available tools for detecting AI-written text in order to find the best AI content detection tool.

AI has developed greatly over the years, but one of the greatest leaps came a few years ago, when researchers introduced “transformer” neural networks built around a mechanism called “attention,” which lets a model take context into account when completing text.

OpenAI built its GPT text generation models on this research, and GPT has improved dramatically. It’s now in its third generation, GPT-3, which produces text so fluent that even experts can struggle to distinguish it from human writing.

Website investors are increasingly aware of the role AI-generated content plays in the industry, and when you’re buying a website, it’s becoming vital to check for it.

But how do you detect AI-written content? We test the current AI content detection tools to determine which is best, and we answer the question: does any AI content detection tool actually work?

Table of Contents

- How we rate AI content detection

- AI content detection tools reviews

- AI Detector Pro

- Writer.com AI content detection

- PoemOfQuotes AI Content Detector

- GLTR (giant language model test room)

- GPT-2 Output Detector by HuggingFace

- Key points

How we rate AI content detection

Together with experts in AI and human psychology, we developed a suite of three testing datasets. These datasets are designed to test how well AI content detectors distinguish between human-written and AI-generated content.

The first two datasets compare the latest transformer text generation models to human-written text. We use several different models in these datasets, weighted by their popularity and accuracy; the most common are GPT-3’s latest model, text-davinci-003, and AI21’s JURASSIC-1. Each of these datasets contains 100 samples, half human-written and half AI-generated.

The third dataset is a “sanity check” which compares human-written text to GPT-2 generated text. Since GPT-2 generated text is pretty easy to distinguish from human-written text, we expect all tools to score highly on this one. This dataset contains 10 samples.
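The scores in the tables that follow appear to award one point per correctly labeled sample. That is our reading of the Writer.com results, not a formula the testers state. A minimal Python sketch of that scoring, using hypothetical label strings `"human"` and `"ai"`:

```python
from typing import Sequence


def dataset_score(truth: Sequence[str], predicted: Sequence[str]) -> int:
    """Count the samples whose predicted label matches the true label."""
    if len(truth) != len(predicted):
        raise ValueError("truth and predicted must have the same length")
    return sum(t == p for t, p in zip(truth, predicted))


def report(name: str, truth: Sequence[str], predicted: Sequence[str]) -> str:
    """Format a score the way the review tables do, e.g. 'Dataset 3 (out of 10): 9'."""
    return f"{name} (out of {len(truth)}): {dataset_score(truth, predicted)}"


# Hypothetical 4-sample example: one AI sample is mislabeled as human.
truth = ["human", "ai", "human", "ai"]
predicted = ["human", "ai", "human", "human"]
print(report("Toy dataset", truth, predicted))  # Toy dataset (out of 4): 3
```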

AI content detection tools reviews

Below are our reviews of all of the top AI content detection tools available on the market today.

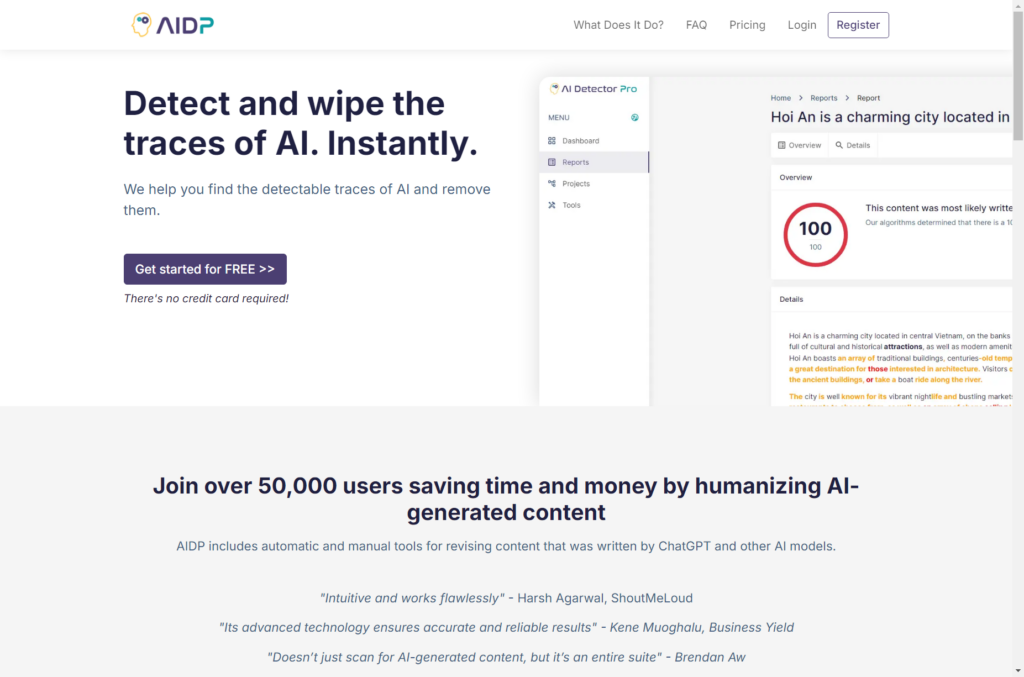

AI Detector Pro

AI Detector Pro scored the highest in our tests, with a total score of 100%. It can also “humanize” AI-generated text.

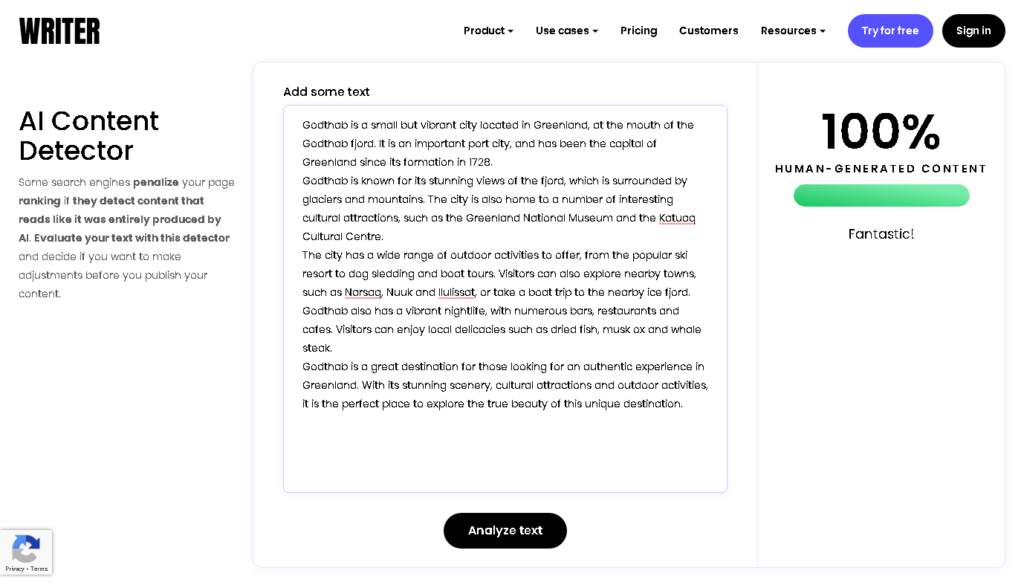

Writer.com AI content detection

We also tested Writer.com’s AI content detection tool, which is free and openly available. It gives a percentage score between 0 and 100% for the likelihood that a human wrote the article. If the score is over 50%, the tool believes a human wrote the article; if it is under 50%, the tool believes AI wrote it.

Writer.com AI content detection test scores

In our tests, however, Writer.com’s detector returned a score well over 50% for every sample in datasets 1 and 2. Surprisingly, it often gave a score of 100% to AI-generated content, while it rarely returned 100% for samples that were actually written by a human.

Because each of the first two datasets had 50% human generated content and 50% AI generated content, the Writer.com AI content detection tool got a score of 50 on both datasets.
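The arithmetic behind that result is easy to check. In this sketch, a hypothetical detector mimics what we saw from Writer.com by returning a human-likelihood above 50% for every sample. The 50% cut-off follows the tool’s description above; treating a score of exactly 50% as “AI” is our own assumption.

```python
def label_from_human_score(human_pct: float, threshold: float = 50.0) -> str:
    """Map a 'likelihood a human wrote it' percentage to a label.

    Above the threshold means human; we assume a score of exactly 50% counts as AI.
    """
    return "human" if human_pct > threshold else "ai"


# Hypothetical balanced dataset: 50 human-written and 50 AI-generated samples.
truth = ["human"] * 50 + ["ai"] * 50
# A detector that, like Writer.com in our tests, scores every sample above 50%.
human_scores = [95.0] * len(truth)
predicted = [label_from_human_score(s) for s in human_scores]
score = sum(t == p for t, p in zip(truth, predicted))
print(score)  # 50: every human sample is right, every AI sample is wrong
```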

On the third dataset, which pairs human-written text with GPT-2 output, the Writer.com AI content detector again returned a score of over 50% for every sample. It returned scores of 90% to 100% for even the most nonsensical content generated by GPT-2.

| Dataset | Score |
| --- | --- |
| Dataset 1 (out of 100) | 50 |
| Dataset 2 (out of 100) | 50 |
| Dataset 3 (out of 10) | 5 |

In fact, we weren’t able to get the Writer.com AI content detector to come up with a score of less than 50% for anything we put in.

This tool isn’t particularly useful in practice, given that it seems to think whatever you put in is human-written content.

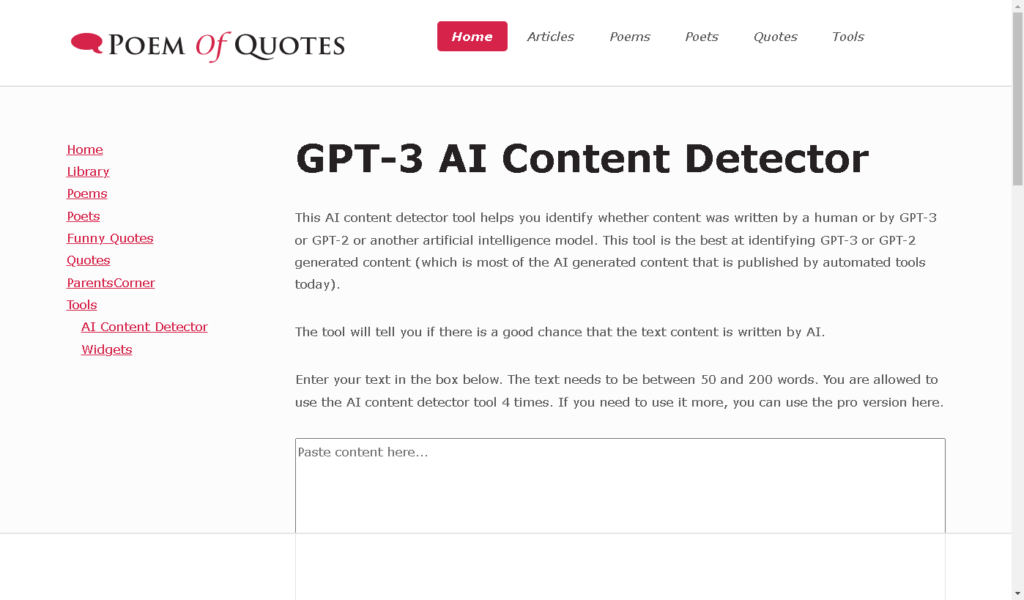

PoemOfQuotes AI Content Detector

The PoemOfQuotes AI Content Detector isn’t much to look at, but it seems to work pretty well.

The tool is free and requires no registration or login, but it limits users to 4 queries. Each query must be between 50 and 200 words, so you’ll need to split longer text into separate batches.
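If your text falls outside the 50 to 200 word window, a sketch like the one below splits it into the fewest evenly sized batches that fit. It is our own helper, not something the tool provides, and it assumes words are whitespace-separated, which may not match exactly how the tool counts them.

```python
import math

MIN_WORDS = 50   # smallest input the tool accepts
MAX_WORDS = 200  # largest input the tool accepts


def split_into_batches(text: str, max_words: int = MAX_WORDS, min_words: int = MIN_WORDS) -> list[str]:
    """Split text into the fewest evenly sized batches that each fit the word limits."""
    words = text.split()
    if len(words) < min_words:
        raise ValueError(f"need at least {min_words} words, got {len(words)}")
    n_batches = math.ceil(len(words) / max_words)
    # Spread words evenly; with 2+ batches every batch gets at least max_words // 2 words.
    base, extra = divmod(len(words), n_batches)
    batches, start = [], 0
    for i in range(n_batches):
        size = base + (1 if i < extra else 0)
        batches.append(" ".join(words[start:start + size]))
        start += size
    return batches


sample = " ".join(f"w{i}" for i in range(450))
print([len(b.split()) for b in split_into_batches(sample)])  # [150, 150, 150]
```

Splitting evenly, rather than into 200-word chunks plus a remainder, avoids a short final batch that falls under the 50-word minimum.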

When you enter text, the tool returns a simple “this was generated by a human” or “this was generated by AI” message. There are no configuration options, and no confidence score is given.

PoemOfQuotes AI Content Detector test scores

The PoemOfQuotes AI Content Detector scored very well on our tests.

The scores are:

| Dataset | Score |
| --- | --- |
| Dataset 1 (out of 100) | 87 |
| Dataset 2 (out of 100) | 89 |
| Dataset 3 (out of 10) | 10 |

The PoemOfQuotes.com AI Content Detector tool is able to distinguish very well between human and AI generated content. On the two datasets using the latest AI models, dataset 1 and dataset 2, the tool scored close to 90%.

The only text the tool consistently misclassified was generated by the JURASSIC-1 model. It was nearly (but not quite) perfect on human-written and GPT-3-generated text.

GLTR (giant language model test room)

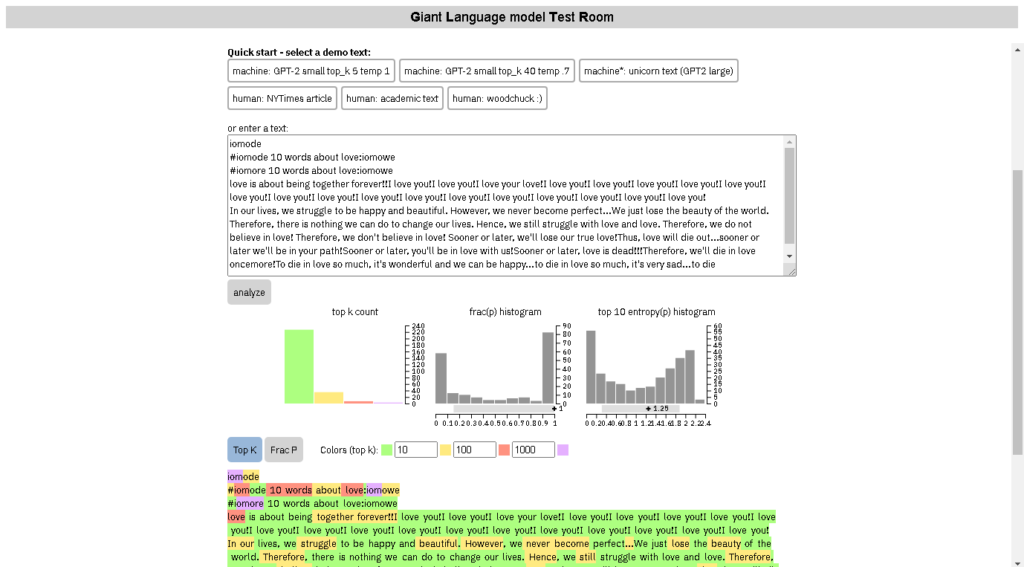

MIT, Harvard, and IBM worked together to develop GLTR. The tool came out of a research project aimed at detecting the statistical footprint that a natural language generation model leaves in the text it produces.

Here’s what the researchers wrote about GLTR:

The aim of GLTR is to take the same models that are used to generated fake text as a tool for detection. GLTR has access to the GPT-2 117M language model from OpenAI, one of the largest publicly available models. It can use any textual input and analyze what GPT-2 would have predicted at each position. Since the output is a ranking of all of the words that the model knows, we can compute how the observed following word ranks. We use this positional information to overlay a colored mask over the text that corresponds to the position in the ranking. A word that ranks within the most likely words is highlighted in green (top 10), yellow (top 100), red (top 1,000), and the rest of the words in purple. Thus, we can get a direct visual indication of how likely each word was under the model.

GLTR documentation

According to the research, GLTR is able to distinguish GPT-2 generated text from human-written text. But how well does it work on the latest GPT-3 and other current generation AI models?

GLTR on GPT-3

Again, we ran 3 datasets of sample text through the GLTR tool. In the first two, the AI samples come from GPT-3 and JURASSIC-1 models; in the third, they come from GPT-2. The GLTR tool is a bit more complicated to test, because it doesn’t produce a simple score like Writer.com’s AI content detection tool.

With the GLTR tool, you need to interpret the result yourself. GLTR runs the sample through the GPT-2 model and, at each position, checks how highly the model ranked the word that actually comes next. If that word is among the model’s top choices, it is highlighted in green. The less likely GPT-2 was to generate it, the further the highlight moves through yellow and red to purple.

So in theory, a fully GPT-2 generated article should be mostly green. And an article written by a human should be a mix of different colors, including a lot of red and purple.
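The ranking idea GLTR describes can be sketched with the Hugging Face `transformers` package. This is our own approximation, not GLTR’s code. It assumes the `gpt2` checkpoint on the Hugging Face Hub stands in for the GPT-2 117M model GLTR uses, and that the color bands are 0-based ranks below 10, 100, and 1,000, as in the quoted description. `torch` and `transformers` are imported inside `gpt2_token_ranks`, so the bucketing helpers run without them.

```python
from collections import Counter

COLORS = ("green", "yellow", "red", "purple")


def gltr_color(rank: int) -> str:
    """Map a 0-based rank (0 = the model's top prediction) to GLTR's color bands."""
    if rank < 10:
        return "green"   # within the top 10
    if rank < 100:
        return "yellow"  # within the top 100
    if rank < 1000:
        return "red"     # within the top 1,000
    return "purple"      # everything else


def color_fractions(ranks: list[int]) -> dict[str, float]:
    """Share of words in each color band; mostly green suggests model-generated text."""
    if not ranks:
        raise ValueError("ranks must not be empty")
    counts = Counter(gltr_color(r) for r in ranks)
    return {color: counts[color] / len(ranks) for color in COLORS}


def gpt2_token_ranks(text: str, model_name: str = "gpt2") -> list[int]:
    """Rank of each observed token among GPT-2's predictions for that position."""
    import torch  # imported lazily so the helpers above work without torch/transformers
    from transformers import GPT2LMHeadModel, GPT2TokenizerFast

    tokenizer = GPT2TokenizerFast.from_pretrained(model_name)
    model = GPT2LMHeadModel.from_pretrained(model_name).eval()
    ids = tokenizer(text, return_tensors="pt", truncation=True, max_length=1024).input_ids
    with torch.no_grad():
        logits = model(ids).logits[0]  # shape: (sequence length, vocabulary size)
    ranks = []
    # The logits at position i predict token i + 1; the first token has no prediction.
    for i in range(ids.shape[1] - 1):
        actual = ids[0, i + 1]
        # Rank = how many vocabulary entries scored strictly higher than the real token.
        ranks.append(int((logits[i] > logits[i, actual]).sum()))
    return ranks
```

Calling `color_fractions(gpt2_token_ranks(text))` gives a rough numeric version of GLTR’s color overlay. Note that it ranks GPT-2 tokens, which do not always line up with whole words.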

GLTR also provides a handy histogram of the ratio between the probability of the top predicted word and the probability of the word that actually follows. Using trial and error, we found that texts with an average ratio of 0.75 or above were likely to be GPT-generated, and texts below that were likely to be human-written.
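The 0.75 rule can be written down as a small function. We assume the per-word ratio is the probability GPT-2 assigned to the word that actually appears, divided by the probability of its top prediction at that position, so a value of 1.0 means the word was the model’s first choice. The function names and the example probabilities are hypothetical.

```python
from statistics import mean
from typing import Iterable


def word_ratio(p_actual: float, p_top: float) -> float:
    """Probability of the observed word relative to the model's top choice (1.0 = top choice)."""
    if p_top <= 0:
        raise ValueError("p_top must be positive")
    return p_actual / p_top


def classify_by_mean_ratio(ratios: Iterable[float], threshold: float = 0.75) -> str:
    """Trial-and-error rule: a mean ratio of 0.75 or above suggests GPT-generated text."""
    return "ai" if mean(ratios) >= threshold else "human"


# Hypothetical per-word probabilities (p_actual, p_top) for a short passage.
pairs = [(0.40, 0.40), (0.30, 0.35), (0.05, 0.50), (0.60, 0.60)]
ratios = [word_ratio(p, top) for p, top in pairs]
print(classify_by_mean_ratio(ratios))  # human (mean ratio is about 0.74)
```

A single unlikely word (here, the third one) pulls the average below the cut-off, which is one reason a hand-picked threshold like this can misfire near the boundary.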

Using this rubric, we ran all three datasets through GLTR.

GLTR test scores

The scores are here:

| Dataset | Score |
| --- | --- |
| Dataset 1 (out of 100) | 82 |
| Dataset 2 (out of 100) | 87 |
| Dataset 3 (out of 10) | 10 |

Unsurprisingly, GLTR was very accurate on dataset 3. Its AI samples are GPT-2 text completions, so they should be relatively easy to detect.

What was more surprising is how well GLTR did on datasets 1 and 2. The AI samples in both datasets were mostly GPT-3 text, which is much more advanced than GPT-2. In fact, the only samples that GLTR routinely got wrong were generated by JURASSIC-1, a model that is not based on GPT.

These results suggest that GLTR is a good option for determining whether something was written by AI, and you’re more likely than not to get a correct answer.

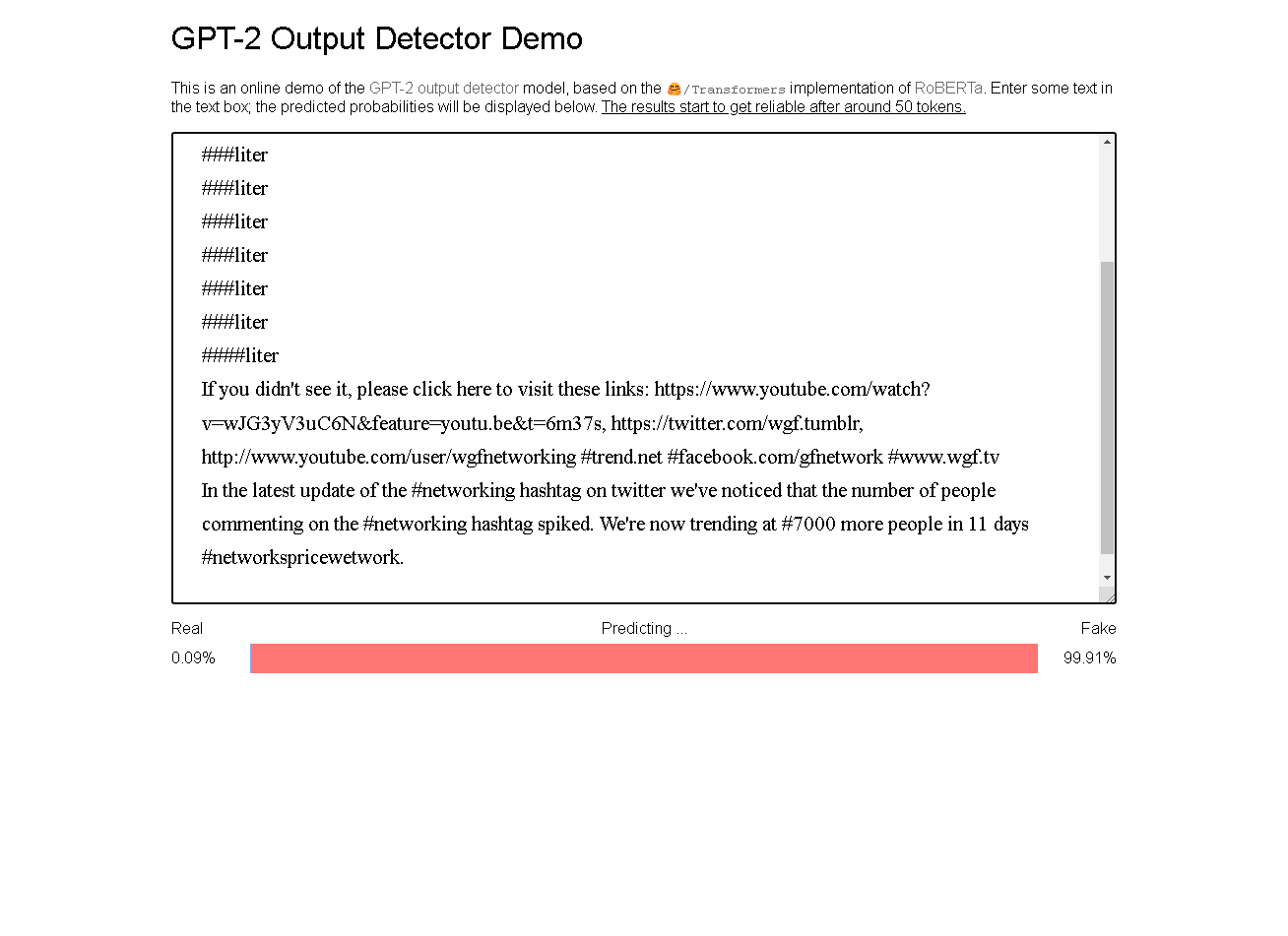

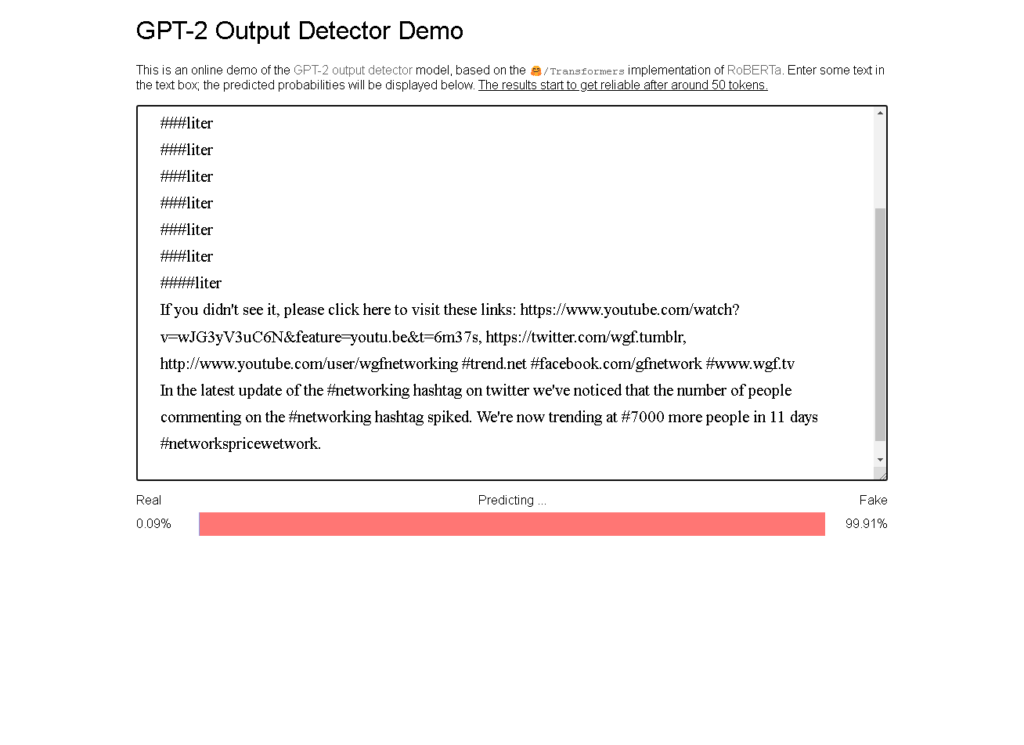

GPT-2 Output Detector by HuggingFace

Anyone in the AI community knows the name HuggingFace: they created the transformers Python package, which underpins many of the natural language processing applications available today.

HuggingFace has a GPT-2 Output Detector tool that is supposed to give reasonably good AI content detection if you provide it with more than 50 tokens. (Note: tokens are a complicated concept, but as a gross oversimplification, you can think of them as words.) The GPT-2 Output Detector works best on GPT-2 data, so we would expect it to work well on dataset 3.
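To run a similar check from Python, note that the web demo appears to be backed by a RoBERTa classifier that OpenAI fine-tuned on GPT-2 output. The sketch below assumes that checkpoint is published on the Hugging Face Hub as `openai-community/roberta-base-openai-detector` and that it labels machine text `"Fake"` and human text `"Real"`; verify both on the model card before relying on them. The 50-token check counts tokens with the model’s own tokenizer, so it reflects tokens rather than words.

```python
MIN_TOKENS = 50  # the detector is described as reliable once input exceeds ~50 tokens

# Assumed Hugging Face Hub id for OpenAI's RoBERTa-based GPT-2 output detector.
DEFAULT_MODEL = "openai-community/roberta-base-openai-detector"


def interpret(result: dict) -> str:
    """Turn one pipeline result such as {'label': 'Fake', 'score': 0.97} into 'ai' or 'human'."""
    # Assumption: the checkpoint labels machine-generated text "Fake" and human text "Real".
    return "ai" if result["label"].lower() == "fake" else "human"


def detect(text: str, model_id: str = DEFAULT_MODEL) -> tuple[str, float, int]:
    """Classify text and return (verdict, model confidence, token count)."""
    from transformers import pipeline  # imported lazily; downloads the model on first use

    classifier = pipeline("text-classification", model=model_id)
    n_tokens = len(classifier.tokenizer(text, add_special_tokens=False)["input_ids"])
    if n_tokens <= MIN_TOKENS:
        print(f"warning: only {n_tokens} tokens; the result may be unreliable")
    # RoBERTa reads at most 512 tokens, so longer inputs are truncated.
    result = classifier(text, truncation=True)[0]
    return interpret(result), float(result["score"]), n_tokens
```

Unlike the GLTR sketch, there is no threshold to tune here: the verdict comes from the classifier’s own decision boundary.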

However, it is not clear whether the tool works as well as GLTR on GPT-3 data. So let’s go ahead and look at the results:

GPT-2 Output Detector test scores

The scores are:

| Dataset | Score |
| --- | --- |
| Dataset 1 (out of 100) | 88 |
| Dataset 2 (out of 100) | 91 |
| Dataset 3 (out of 10) | 10 |

HuggingFace’s GPT-2 Output Detector performed very well on all three datasets. Its scores are slightly higher than GLTR’s, probably because GLTR required us to set the probability boundary between AI and human-generated text ourselves.

In contrast, the GPT-2 Output Detector provides its own boundary, which minimizes user error. In practice, GLTR and the GPT-2 Output Detector likely return essentially the same results, but the GPT-2 Output Detector makes those results clearer to interpret.

If you’re going to choose one of the tools on this page for AI content detection, this is a good one.

Key points

In this article, we reviewed several of the most popular AI content detection tools, which are designed to identify AI-generated content.

Perhaps surprisingly, the best of these tools work very well on the latest GPT-3-generated content, which means anyone can determine fairly accurately whether content is AI-generated.

One important caveat: these tools are far less reliable on content generated by alternative AI models. PoemOfQuotes and GLTR both routinely misclassified text samples generated by the JURASSIC-1 model, so they’re not foolproof.

Nevertheless, our tests show that it is possible to identify AI-generated text, and in fact, it’s not particularly difficult.