Is it possible for an expert to distinguish between a human and GPT-3? Or has AI gotten so good that there is no way to distinguish?

OpenAI’s GPT-3 models, along with open-source alternatives such as GPT-Neo and GPT-J, are becoming more and more skilled at impersonating a human. Their writing has gotten so good that it may be impossible to tell a human writer from GPT-3. This is especially true of the latest models, such as OpenAI’s Davinci-003 and AI21 Labs’ Jurassic-1.

There is a burgeoning industry of companies attempting to identify whether something was written by a human or an AI bot. And we have seen that good AI tools can distinguish at least some of the latest AI content from human-generated content.

In this article, we set up an experiment to determine whether it’s possible for a human to distinguish between a human and the latest GPT-3 AI.

Table of Contents

- GPT-3 for non-techies

- An experiment to distinguish between a human and GPT-3

- The result: is it possible to distinguish between a human and GPT-3

- Understanding the result

- Key points

GPT-3 for non-techies

GPT-3 is a large language model that generates human-like text and responds to natural-language prompts. It is trained on a vast amount of text. Unlike traditional supervised machine learning models, GPT-3 does not require large amounts of hand-labeled data. Instead, it learns from raw text by repeatedly predicting what comes next, which is what enables it to generate human-like text.

GPT-3 uses a method called transfer learning, which means it can take knowledge learned from one application and apply it to another. This enables GPT-3 to pick up a variety of tasks quickly. GPT-3 works by taking in some input text and predicting the next word (more precisely, the next token, which may be a word or a fragment of one). It does this by looking at the sequence of words so far and using a neural network to generate a probability distribution for the next word.

The probability distribution assigns a higher probability to words that are more likely to appear next, given the text so far. GPT-3 is able to generate human-like text because of the huge training dataset it uses. By training on this much data, GPT-3 is able to learn the patterns and nuances of natural language. This makes it possible for GPT-3 to generate text that is close to what a human would write.
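To make the idea of a next-word probability distribution concrete, here is a minimal Python sketch. It is not how GPT-3 is implemented: the prompt, the candidate words and their scores are all invented for illustration, and a real model computes scores for tens of thousands of tokens with a neural network. The sketch only shows the last step, turning scores into probabilities and then picking a word.

```python
import math
import random

# Hypothetical scores (logits) a model might assign to candidate next words
# after the prompt "The cat sat on the". The numbers are invented.
logits = {"mat": 4.0, "sofa": 3.0, "roof": 2.0, "banana": -1.0}


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - largest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


def sample_next_word(probs, rng):
    """Pick one word at random, weighted by its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


probs = softmax(logits)
for word, p in sorted(probs.items(), key=lambda item: -item[1]):
    print(f"{word:>7}: {p:.3f}")

rng = random.Random(0)  # fixed seed so the run is repeatable
print("sampled next word:", sample_next_word(probs, rng))
```

Because the word is sampled rather than always taken from the top of the list, the same prompt can produce different continuations, which is part of why generated text does not read as mechanical repetition.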

GPT-3 has opened up a world of possibilities for non-technical users. The technology can generate text from a single sentence, create stories, generate code, and much more. GPT-3 also works for a variety of applications, such as chatbots, summarizing text and generating answers to questions.

For website investors, GPT-3 provides a new, low-cost method of generating content. But we must be careful not to get penalized by Google. The question is: can we as humans identify AI-written content to ensure that our writers don’t sell us junk?

An experiment to distinguish between a human and GPT-3

To understand whether we as website investors can tell when AI wrote an article, we set up an experiment. The main question we will ask is: “is it possible for a human expert to distinguish between a human and GPT-3?”

A top English writer with enough time will write clearer, more syntactically correct text than GPT-3. The problem is, very little web content is written by top English writers with plenty of time. Content writers are often non-native English speakers. And well-educated English writers often have short deadlines that result in imperfect content.

Our experiment

Given all of these parameters, our experiment will be based on this question: can a human expert distinguish between a human and GPT-3 when given single articles of 150 to 400 words?

To perform the experiment, we hired an AI expert and an expert in human psychology. We gave each expert 100 different writing samples. Half were generated by AI text completion models (40 samples from GPT-3’s Davinci-003 and 10 from Jurassic-1) and half were written by humans. We used two models to see whether either one was individually distinguishable.

The results appear in the next section.

The result: is it possible to distinguish between a human and GPT-3

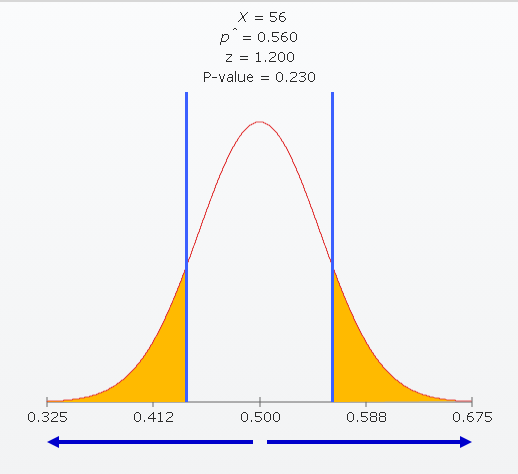

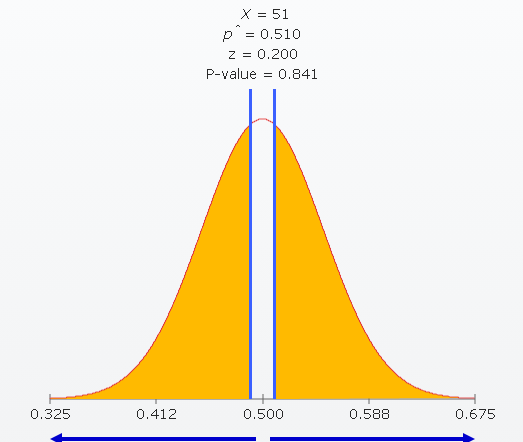

The AI expert classified 56 of the 100 samples correctly, while the psychology expert got 51 right. Since half of the samples were AI-generated, random guessing would be expected to score about 50.

Neither of these results was statistically significant. In other words, neither expert was able to distinguish between the AI and the human at a rate reliably better than chance.
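For readers who want to check the arithmetic, the sketch below runs one plausible significance test on the two scores. The choice of test is an assumption, since the test behind the result is not specified here: it uses an exact two-sided binomial test against the null hypothesis of 50% guessing, with an assumed threshold of 0.05. The function name `two_sided_binomial_p` is made up for this example.

```python
from math import comb


def two_sided_binomial_p(correct, total):
    """Exact two-sided p-value against the null hypothesis of 50% guessing.

    Sums the probability of every outcome at least as far from total/2
    as the observed score.
    """
    observed_gap = abs(correct - total / 2)
    favorable = sum(
        comb(total, k)
        for k in range(total + 1)
        if abs(k - total / 2) >= observed_gap
    )
    return favorable / 2 ** total


ALPHA = 0.05  # assumed significance threshold; the article does not state one

for name, correct in [("AI expert", 56), ("psychology expert", 51)]:
    p = two_sided_binomial_p(correct, 100)
    verdict = "significant" if p < ALPHA else "not significant"
    print(f"{name}: {correct}/100 correct, p = {p:.3f} ({verdict})")
```

Under these assumptions the p-values come out at roughly 0.27 for the AI expert and 0.92 for the psychology expert, both far above 0.05, which is consistent with the conclusion that neither score is distinguishable from guessing.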

Understanding the result

It’s important to understand what the result suggests and what it doesn’t.

What we found was that neither expert was able to distinguish between a human and GPT-3 in a statistically significant way. But we must remember that the data was presented as single articles on random topics. As on the Internet, the articles written by humans were not from the same author, but from a wide variety of authors. And the experts did not have a chance to query the AI directly.

In planning this experiment, we did a few additional non-scientific experiments. From those, we noticed that the experts became better at distinguishing between the AI and the human when they got multiple articles from the same human. Humans tend to develop a writing style of their own, so when the experts saw multiple articles from the same human, they started to see a pattern.

We also noticed that when the experts had the opportunity to query the AI or human, they were much better at distinguishing. By asking enough questions, they could identify whether they were communicating with a human or AI.

Key points

It’s getting more and more difficult to distinguish between a human and GPT-3 when looking purely at a single article on the Internet. Although AI tools have shown promise in telling the two apart, humans have more trouble. In our experiment, we found that neither of our experts had any statistically significant ability to identify the AI.